A container is a lightweight, standalone, executable package that includes everything needed to run a piece of software: code, runtime, system tools, system libraries, and settings. This technology is central to containerization, a method of packaging software so it can run with its dependencies while isolated from other processes.

This article explores what containerization is, the key components of containers, and how containers differ from virtual machines (VMs). The sections below also cover the benefits, use cases, and popular container technologies. Lastly, this article looks at potential challenges and future trends in containerization.


Understanding containers

Containers allow developers to package and run applications in isolated environments, a process known as containerization. This technology provides a consistent and efficient means of deploying software across different environments, from a developer’s local workstation to production servers, without worrying about differences in OS configurations and underlying infrastructure.

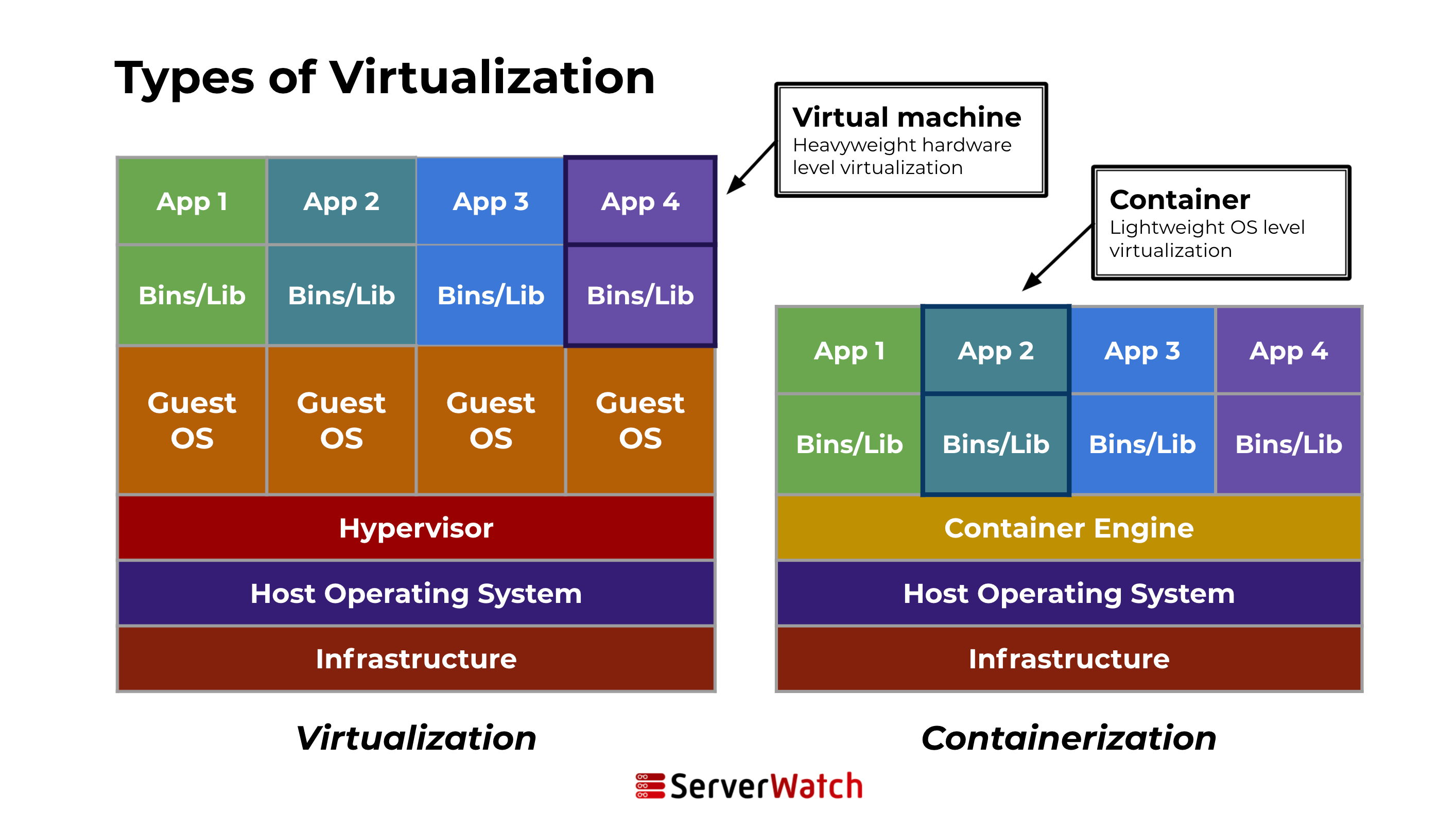

Unlike traditional deployment methods, containers encapsulate an application and its dependencies in a container image. This image includes everything the application needs to run: code, runtime, libraries, and system tools. Containers share the host system’s kernel instead of booting their own OS, yet each one gets an isolated filesystem, process space, and network stack, and the kernel can cap its CPU and memory use. As a result, containers are much lighter and more resource-efficient than virtual machines.

Containers vs. virtual machines

Containers and VMs are both used to provide isolated environments for running applications, but they work in fundamentally different ways. The table below summarizes the differences between containerization and virtualization.

| | Architecture | Resource management |
|---|---|---|
| Containers | Containers share the host system’s kernel but isolate the application processes from the system. They do not require a full OS for each instance, making them more lightweight and faster to start than VMs. | Containers are more efficient and consume fewer resources than VMs because they share the host system’s kernel and only require the application and its runtime environment. |
| Virtual machines | A VM includes not only the application and its dependencies but also an entire guest OS. This OS runs on virtual hardware powered by a hypervisor, which sits atop the host’s physical hardware. VMs are isolated from each other and from the host system, providing a high degree of security and control. | The need to run a full OS in each VM means they consume more system resources, which can lead to less efficient utilization of underlying hardware. |

How does containerization work?

Containerization encapsulates an application in a container with its own operating environment. The process involves several steps:

- Creating a container image: This image is a lightweight, standalone, executable package that includes everything needed to run the application — code, runtime, libraries, and settings.

- Running the image: When a container engine (such as Docker) starts the image, the resulting container runs as an isolated process in user space on the host OS.

- Isolation and resource allocation: On Linux, containers are isolated with namespaces, which limit what a process can see, and control groups (cgroups), which limit the resources it can use. Together they keep containers from interfering with each other or with the host system, and they allow resources to be allocated per container.
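To make the isolation step more concrete, here is a minimal Python sketch, assuming a Linux host. Each entry in `/proc/<pid>/ns` is a symbolic link whose target (for example `net:[4026531840]`) identifies one namespace, and two processes share a namespace exactly when those targets match. The helper names `read_namespaces` and `isolated_namespaces` are hypothetical, and reading another process's entries usually requires root privileges.

```python
import os
from typing import Dict, Set


def read_namespaces(pid: str = "self") -> Dict[str, str]:
    """Map each namespace type to its link target for one process (Linux only).

    Entries in /proc/<pid>/ns are symbolic links whose targets look like
    'net:[4026531840]'. Reading another process's entries usually needs root.
    """
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name)) for name in os.listdir(ns_dir)}


def isolated_namespaces(a: Dict[str, str], b: Dict[str, str]) -> Set[str]:
    """Namespace types that both processes report but do not share."""
    return {ns for ns in a.keys() & b.keys() if a[ns] != b[ns]}
```

Comparing a host shell with a process inside a default Docker container would typically show different `mnt`, `net`, `pid`, `uts`, and `ipc` entries, since the engine creates fresh namespaces of those types for each container.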

Key components of a container

Several key components make up a container:

- Container engine: This is the core software that provides a runtime environment for containers. Examples include Docker and the now-discontinued rkt. The engine creates, runs, and manages the lifecycle of containers.

- Container image: This is a read-only template, typically stored as a stack of filesystem layers plus configuration metadata, that includes all the components needed to run an application: code, runtime, system tools, libraries, and settings.

- Registry: This is a storage and content delivery system, holding container images. Users can pull images from a registry to deploy containers.

- Orchestration tools: These are tools for managing multiple containers. They help automate the deployment, scaling, and operations of containerized applications. Kubernetes is a prime example of an orchestration tool.

- Namespaces and cgroups: These Linux features are used to isolate containers. Namespaces ensure that each container has its own isolated workspace (including file system, network stack, etc.), and cgroups manage resource allocation (CPU, memory, disk I/O, etc.) to each container.
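How an engine locates an image in a registry can be sketched in Python. The function below is a simplified, hypothetical approximation of the normalization the Docker CLI applies to short image names: a missing registry defaults to `docker.io`, single-component Docker Hub names gain the `library/` prefix, and a reference with neither a tag nor a digest defaults to `latest`. It skips validation and some edge cases the real parser handles.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_REGISTRY = "docker.io"


@dataclass
class ImageRef:
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]

    def __str__(self) -> str:
        ref = f"{self.registry}/{self.repository}"
        if self.tag:
            ref += f":{self.tag}"
        if self.digest:
            ref += f"@{self.digest}"
        return ref


def parse_image_ref(ref: str) -> ImageRef:
    # Split off an optional content digest, e.g. "@sha256:...".
    name, _, digest = ref.partition("@")
    # A tag is a ':' after the last '/', so "host:5000/app" keeps its port.
    tag = None
    if name.rfind(":") > name.rfind("/"):
        name, _, tag = name.rpartition(":")
    # The first path component is a registry only if it looks like a host.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name
    # Docker Hub "official" images live under the "library/" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = f"library/{repository}"
    # With neither a tag nor a digest, engines fall back to "latest".
    if tag is None and not digest:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest or None)
```

For example, `parse_image_ref("nginx")` normalizes to `docker.io/library/nginx:latest`, while a digest-pinned reference keeps its digest and gets no default tag.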

Container use cases

Containers have a wide range of applications in the modern software landscape, catering to various needs in software development, deployment, and management. Their versatility and efficiency have made them a popular choice for numerous scenarios.

Microservices and cloud-native applications

Containers are inherently suited for microservices, a design approach where applications are composed of small, independent services. Each microservice can be encapsulated in a separate container, ensuring isolated environments, reducing conflicts, and making each service easy to update and scale independently.

In a cloud-native context, containers enable applications to be highly scalable and resilient. They can be easily replicated, managed, and monitored, allowing for efficient load balancing and high availability.

Orchestration tools like Kubernetes can also manage containers dynamically, optimizing resource usage, automatically replacing failed containers, and scaling workloads in response to demand.
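Kubernetes' Horizontal Pod Autoscaler documents its core scaling rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping any change when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below applies the rule with hypothetical minimum and maximum replica bounds; it leaves out the stabilization windows, Pod readiness handling, and missing-metric logic the real controller uses.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule (simplified sketch)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Within the tolerance band around 1.0, keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas/maxReplicas in an HPA spec).
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas averaging 90% CPU against a 60% target, the ratio is 1.5, so the sketch asks for 6 replicas; at 63% the ratio falls inside the tolerance and the count stays at 4.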

Continuous integration/continuous deployment (CI/CD) pipelines

Containers integrate seamlessly into CI/CD pipelines, providing consistent environments from development through production. This consistency helps teams identify and fix issues early in the development cycle.

Furthermore, containers can be used to automate testing environments, ensuring that every commit is tested in a production-like environment. This leads to more reliable deployments and a faster release cycle.

Lastly, since containers encapsulate the application and its environment, they ensure that the software behaves the same way in development, testing, staging, and production environments, reducing deployment failures due to environmental discrepancies.

Application packaging and distribution

Containers encapsulate an application and all its dependencies, making it easy to package and distribute software across different environments. Thanks to this portability, the same image can run without modification on any platform or cloud environment that provides a compatible container runtime, kernel, and CPU architecture.

Additionally, container registries can store multiple versions of container images, allowing easy rollbacks to previous versions if needed. This ability enhances the reliability and stability of application deployment.
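One common way to make rollbacks reproducible is to deploy images by digest rather than by a mutable tag, since a tag such as `latest` can later be moved to a different image. The class below is a hypothetical, in-memory sketch of a deployment history built on that assumption; a real platform would persist the history and trigger an actual redeploy.

```python
from typing import List


class DeploymentHistory:
    """Hypothetical record of the image references one environment has run."""

    def __init__(self) -> None:
        self._releases: List[str] = []

    def deploy(self, image_ref: str) -> str:
        # Pinning by digest (name@sha256:...) makes each release immutable,
        # unlike a tag, which can be repointed to another image.
        if "@sha256:" not in image_ref:
            raise ValueError("pin releases by digest so rollbacks are reproducible")
        self._releases.append(image_ref)
        return image_ref

    @property
    def current(self) -> str:
        return self._releases[-1]

    def rollback(self) -> str:
        # Drop the latest release and return the one that should run again.
        if len(self._releases) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self._releases.pop()
        return self._releases[-1]
```

Because the earlier image is still stored in the registry under its digest, rolling back only requires redeploying that reference.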

13 benefits of containerization

Containerization has become a cornerstone of modern software development and deployment strategies due to its numerous benefits. Here, we’ll explore the key advantages that make containerization an attractive choice for developers, operations teams, and businesses.

- Lightweight nature: Containers require fewer system resources than traditional VMs as they share the host system’s kernel and avoid the overhead of running an entire OS for each application.

- Reduced overhead: The reduced resource needs translate to more applications running on the same hardware, leading to better utilization of underlying resources and cost savings.

- Rapid scaling: Containers can be started, stopped, and replicated quickly and easily. This allows for agile response to changes in demand, facilitating horizontal scaling.

- Ease of management: With orchestration tools like Kubernetes, containers can be automatically scaled up or down based on traffic patterns, system load, and other metrics.

- Uniform environments: Containers provide consistent environments from development through production. This uniformity reduces the “it works on my machine” syndrome and makes debugging and development more efficient.

- Improved security: By isolating applications and processes from one another, containers can limit how far a compromise spreads, so an attacker who breaks into one containerized application does not automatically gain access to the others on the same host.

- Pipeline integration: Containers easily integrate into CI/CD pipelines, automating the software release process and reducing manual intervention.

- Process isolation: Containers isolate applications from each other and from the host system. This isolation minimizes the risk of interference and improves security.

- Defined resource limits: Containers can have set limits on CPU and memory usage, preventing a single container from consuming all available system resources.

- Platform independence: Containers encapsulate everything an application needs to run. This makes them portable across different environments — cloud and on-premises — and even across different providers.

- Reduced dependency conflicts: Since containers are self-contained, they minimize conflicts between differing software versions and dependencies.

- Rapid deployment: Containers can typically be created and started in seconds or less, compared with the minutes it can take to provision and boot a VM.

- Agile development and testing: The fast start-up times facilitate quick iteration and testing, accelerating the development cycle.
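Defined resource limits are ultimately enforced by cgroups. Docker's documentation states that `--cpus="1.5"` is equivalent to `--cpu-period="100000"` and `--cpu-quota="150000"`; on a cgroup v2 host, a quota and period like these are written to the container's `cpu.max` file, and a `--memory` limit ends up in `memory.max` as a byte count. The Python sketch below performs those conversions under simplifying assumptions: it accepts only integer memory sizes with an optional `b`, `k`, `m`, or `g` suffix (binary multiples), and the function names are hypothetical.

```python
import re

PERIOD_US = 100_000  # Docker's default CFS period: 100 ms, in microseconds

# Binary multiples for the supported memory suffixes.
_UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def cpu_max(cpus: float, period_us: int = PERIOD_US) -> str:
    """cgroup v2 'cpu.max' value ("<quota> <period>") for a --cpus style limit."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    quota = round(cpus * period_us)
    return f"{quota} {period_us}"


def memory_max(limit: str) -> int:
    """Byte count for a --memory style limit such as '512m'."""
    match = re.fullmatch(r"(\d+)([bkmg]?)", limit.strip().lower())
    if not match:
        raise ValueError(f"unrecognized memory limit: {limit!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit or "b"]
```

Under these assumptions, `cpu_max(1.5)` yields `"150000 100000"` and `memory_max("512m")` yields 536870912 bytes.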

Challenges and considerations in containerization

While containerization offers numerous benefits, it also comes with its own set of challenges and considerations. Understanding these is crucial for organizations to effectively implement and manage container-based environments.

Security issues

Like any layer of technology, containers can introduce security concerns, such as:

- Container escape vulnerabilities: Containers share the host OS’s kernel, so a vulnerability in the kernel or the container runtime could let a process break out of its container and gain unauthorized access to the host system.

- Image security: Container images can contain vulnerabilities. If an image is compromised, it can spread issues across every container instantiated from it.

- Network security: Containers often require complex networking configurations. Misconfigurations can expose containerized applications to network-based attacks.

- Resource isolation: Containers are isolated from one another, but less strongly than VMs because they share a kernel. A breached container can therefore pose a greater risk to the host and to neighboring containers.

- Compliance and auditing: Ensuring compliance with various regulatory standards can be challenging, as traditional compliance and auditing tools may not be adapted for containerized environments.
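One basic safeguard for image security is checking that every downloaded image blob matches the content digest it was referenced by, since OCI registries address layers and manifests by digests of the form `sha256:<hex>`. The Python sketch below shows that check; the function names are hypothetical. It only detects corruption or tampering after publication: an image that was published with vulnerable packages inside still passes, which is why image scanning and signing are used as well.

```python
import hashlib


def compute_digest(blob: bytes) -> str:
    """Content digest in the OCI '<algorithm>:<hex>' form, using SHA-256."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_blob(blob: bytes, expected_digest: str) -> bool:
    """Return True if the blob's content matches the digest it was referenced by."""
    algorithm, _, _ = expected_digest.partition(":")
    if algorithm != "sha256":
        # This sketch only implements SHA-256, the most commonly used algorithm.
        raise ValueError(f"unsupported digest algorithm: {algorithm!r}")
    return compute_digest(blob) == expected_digest
```

An engine performing this check would refuse to unpack a layer whose bytes do not hash to the digest listed in the image manifest.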

Complexity in management

While containerization streamlines many aspects of deploying and running applications, it introduces its own set of complexities in management.

- Orchestration complexity: Managing a large number of containers and ensuring their interaction works seamlessly can be complex, especially in large-scale deployments.

- Monitoring and logging: Efficiently monitoring the performance of containerized applications and maintaining logs for all containers can be challenging.

- Updates and maintenance: Continuous integration and deployment mean frequent updates, which can be difficult to manage and track across multiple containers and environments.

- Skill set requirement: Effective management of containerized environments often requires a specific skill set, including knowledge of container orchestration tools and cloud-native technologies.

Integration with existing systems

Adopting containerization often requires integrating this modern technology with existing systems, a task that presents unique challenges and considerations.

- Legacy systems: Integrating containerized applications with legacy systems can be challenging due to differences in technology stacks, architecture, and operational practices.

- Data management: Managing data persistence and consistency across stateful applications in a containerized environment can be complex.

- Network configuration: Ensuring seamless network connectivity between containers and existing systems, especially in hybrid cloud environments, requires careful planning and execution.

- Dependency management: Containers have dependencies that need to be managed and updated, and ensuring compatibility with existing systems adds an additional layer of complexity.

Popular container technologies

Container technologies have rapidly evolved, offering a range of tools and platforms to facilitate containerization in various environments. Among these, some have stood out for their widespread adoption and robust feature sets.

Docker

Docker is arguably the most popular container platform. It revolutionized the container landscape by making containerization more accessible and standardized. Docker provides a comprehensive set of tools for developing, shipping, and running containerized applications.

Key features:

- Docker Engine: A lightweight and powerful runtime that builds and runs Docker containers.

- Docker Hub: A cloud-based registry service for sharing and managing container images.

- Docker Compose: A tool for defining and running multi-container Docker applications.

- Docker Swarm: A native clustering and scheduling tool for Docker containers.

Benefits:

- Ease of use: Docker’s simplicity and user-friendly interface have contributed to its widespread adoption.

- Community and ecosystem: A large community and a rich ecosystem of tools and extensions enhance Docker’s capabilities.

- Cross-platform support: Docker runs on Windows, macOS, and Linux, facilitating cross-platform development and deployment. On macOS and Windows, Docker Desktop runs Linux containers inside a lightweight VM.

Kubernetes

Kubernetes, often abbreviated as K8s, is an open-source system for automating the deployment, scaling, and management of containerized applications. It runs containers through runtimes that implement its Container Runtime Interface (CRI), such as containerd and CRI-O, and can run images built with Docker.

Key features:

- Automated scheduling: Kubernetes automatically schedules containers based on resource availability and constraints.

- Self-healing: It restarts failed containers and replaces and reschedules containers when nodes die.

- Horizontal scaling: It supports automatic scaling of application containers based on demand.

- Service discovery and load balancing: Kubernetes can expose a group of Pods (the units that wrap one or more containers) under a single DNS name and IP address and balance traffic across them.
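Automated scheduling in Kubernetes follows a filter-then-score pattern: nodes that cannot satisfy a Pod's requirements are filtered out, and the remaining nodes are scored. The Python sketch below is a heavily simplified, hypothetical version that considers only CPU and memory requests and prefers the node with the most capacity left after placement, loosely modeled on a "least allocated" strategy. The real scheduler also weighs affinity rules, taints, topology spread, and more.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_free_m: int    # allocatable CPU not yet requested, in millicores
    mem_free_mi: int   # allocatable memory not yet requested, in MiB
    cpu_total_m: int   # total allocatable CPU, in millicores
    mem_total_mi: int  # total allocatable memory, in MiB


def pick_node(nodes: List[Node], cpu_req_m: int, mem_req_mi: int) -> Optional[Node]:
    # Filter: drop nodes that cannot fit the requested resources.
    feasible = [n for n in nodes
                if n.cpu_free_m >= cpu_req_m and n.mem_free_mi >= mem_req_mi]
    if not feasible:
        return None  # Kubernetes would leave such a Pod in the Pending state

    # Score: average share of CPU and memory still free after placement.
    def score(n: Node) -> float:
        cpu_left = (n.cpu_free_m - cpu_req_m) / n.cpu_total_m
        mem_left = (n.mem_free_mi - mem_req_mi) / n.mem_total_mi
        return (cpu_left + mem_left) / 2

    return max(feasible, key=score)
```

Favoring the least-loaded node spreads work across the cluster; other strategies, such as packing Pods tightly to free whole nodes, score the same feasible set differently.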

Benefits:

- Scalability and reliability: Ideal for large-scale, high-availability applications.

- Extensive community support: Backed by the Cloud Native Computing Foundation (CNCF), it has strong community and corporate support.

- Rich ecosystem: Integrates with a broad range of tools for logging, monitoring, security, and CI/CD.

Other notable container technologies

Beyond Docker and Kubernetes, the following container technologies are also available to developers:

- rkt (pronounced “Rocket”): Developed by CoreOS, rkt was known for its security features and its integration with other projects like Kubernetes. However, the project has been discontinued since 2020.

- LXC (Linux Containers): Older than Docker, LXC is more akin to a lightweight VM and is used to run full Linux systems within another Linux host.

- Mesos and Marathon: Apache Mesos is a distributed systems kernel, and Marathon is a framework on Mesos for orchestrating containers, providing an alternative to Kubernetes.

- OpenShift: Red Hat’s OpenShift is a Kubernetes-based container platform that provides a complete solution for development, deployment, and management of containers, including a range of developer tools and a container registry.

Future trends in containerization

As containerization continues to evolve, it is shaping up to integrate more deeply with emerging technologies and adapt to new standards and regulations. The future trends in containerization are expected to drive significant changes in how applications are developed, deployed, and managed.

Integration with emerging technologies

Containerization is not static; it is rapidly evolving and increasingly intersecting with various emerging technologies. This section explores how containerization is integrating with and revolutionizing cutting-edge fields, from AI to IoT.

- Artificial intelligence and machine learning (AI/ML): Containerization is poised to play a significant role in AI and ML development. Containers offer an efficient way to package, deploy, and scale AI/ML applications, providing the necessary computational resources and environments quickly and reliably.

- Internet of things (IoT): As IoT devices proliferate, containerization can manage and deploy applications across diverse IoT devices efficiently. Containers can provide lightweight, secure, and consistent environments for running IoT applications, even in resource-constrained scenarios.

- Edge computing: With the rise of edge computing, containers are ideal for deploying applications closer to the data source. Their portability and lightweight nature allow for efficient deployment in edge environments, where resources are limited compared to cloud data centers.

- Serverless computing: The integration of containerization with serverless architectures is an emerging trend. Containers can be used to package serverless functions, providing greater control over runtime environments and dependencies.

Evolution of container standards and regulations

As containerization technology matures and becomes more integral to business and IT infrastructure, the evolution of container standards and regulations is becoming increasingly important.

- Standardization efforts: As container technologies mature, there’s a growing need for standards that ensure compatibility and interoperability among different platforms and tools. The Open Container Initiative (OCI), for example, maintains open specifications for container runtimes, image formats, and image distribution.

- Security and compliance regulations: With increased adoption, containers will likely be subject to more stringent security and compliance regulations. This could lead to the development of new best practices and standards for container security, addressing aspects like image provenance, runtime protection, and vulnerability management.

- Data privacy and sovereignty: As containers are used more frequently to process and store sensitive data, compliance with data privacy laws (like GDPR) and data sovereignty requirements will become increasingly important. This might lead to new tools and practices focused on data protection within containerized environments.

Bottom line: The role of containers will continue to grow

Containers have reshaped the landscape of software development and deployment, offering substantial gains in efficiency, scalability, and consistency. As these technologies continue to evolve, containers will likely become even more integral, enabling innovation and efficiency across many sectors.

Looking ahead, the potential of containerization is vast. Its ability to seamlessly integrate with future technological advancements and adapt to changing regulatory landscapes positions it as a cornerstone of digital transformation strategies. Organizations that leverage container technologies effectively will find themselves at the forefront of innovation, equipped to tackle the challenges of a rapidly evolving digital world.
